How to Implement LRU Cache

Question about LRU cache is one of the broadly used questions in a coding interview. What is the LRU cache? The Least Recently Used (LRU) cache is caching strategy. In LRU, if the cache is full, the element that hasn't been used for the longest time will be evicted from the cache. Let's see an example.

For instance, if we have a cache with a capacity of three items. Initially, the cache is empty.

Let's put 4, 6, 5 in the cache. After completing these actions, the cache is full and the least recently used element is 4.

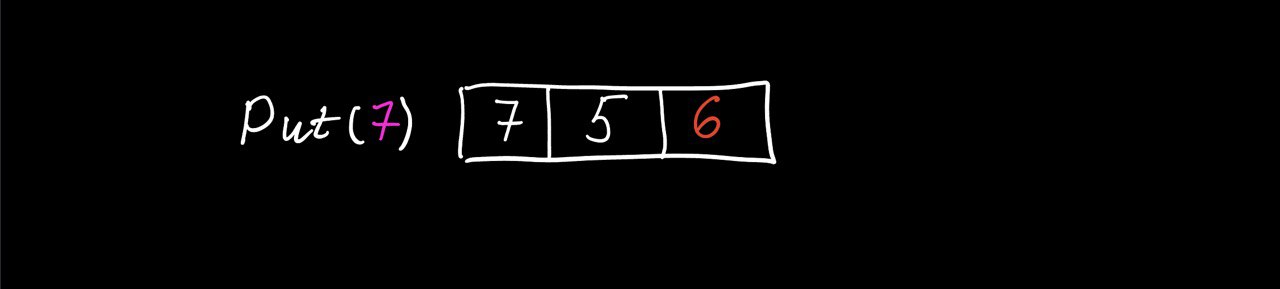

Let's put one more element 7. The cache is full, so we evict the least recently used element 4, after evicting 4 the new least recently used element is 6 and we got a space for 7.

LRU cache supports the put operation and supports the get operation. The get operation returns a value (if exists) and moves just returned element to the top of the cache. Let's get 6 from the cache. The get operation returns 6 and because 6 just has been used, the new recently used element is 5.

Before we implement the LRU cache, it's good to know some requirements:

All operations should run in order of O(1)

- the cache has a

limitedcapacity - if the cache is full, adding a new item must invoke the

LRU strategy

Basic implementation

To implement the LRU cache, we need to be able to:

- return a value by key

- support the least recently used element

To return a value by key, we could use a Dictionary/HashMap, and for supporting the least recently used element, we could use a LinkedList.

private Dictionary<int, string> _cache = new Dictionary<int, string>();

private LinkedList<int> _keys = new LinkedList<int>();

private int _capacity;

public LRUCache(int capacity)

{

_capacity = capacity;

}

Put operation

First of all, we're checking if a key is in the cache, and if so, we move the key to the top and refresh the value.

if the key not in the cache and the cache is full, we evict the least recently used element and put the new element in the cache.

public void Put(int key, string value)

{

if (_cache.ContainsKey(key))

{

_keys.Remove(key); // O(n)

}

else

{

if (_cache.Count == _capacity)

{

// evict least recently used element

_cache.Remove(_keys.Last.Value);

_keys.RemoveLast();

}

}

_keys.AddFirst(key);

_cache[key] = value;

}

The implementation is pretty straightforward, but there's one downside - time complexity. Time complexity is O(n) because of _keys.Remove(key) operation. _keys is a LinkedList, and Remove takes O(n) time because we have to traverse the full linked list to remove an element.

Get operation

If a key exists, we return the value and move the element to the top of the cache, otherwise, return No value

public string Get(int key)

{

if (_cache.ContainsKey(key))

{

_keys.Remove(key); // O(n)

_keys.AddFirst(key);

return _cache[key];

}

return "No value";

}

The time complexity is the same - O(n) because of _keys.Remove(key)

Full basic implementation

public class LRUCache

{

private Dictionary<int, string> _cache = new Dictionary<int, string>();

private LinkedList<int> _keys = new LinkedList<int>();

private int _capacity;

public LRUCache(int capacity)

{

_capacity = capacity;

}

public string Get(int key)

{

if (_cache.ContainsKey(key))

{

_keys.Remove(key); // O(n)

_keys.AddFirst(key);

return _cache[key];

}

return "No value";

}

public void Put(int key, string value)

{

if (_cache.ContainsKey(key))

{

_keys.Remove(key); // O(n)

}

else

{

if (_cache.Count == _capacity)

{

_cache.Remove(_keys.Last.Value);

_keys.RemoveLast();

}

}

_keys.AddFirst(key);

_cache[key] = value;

}

}

The time complexity for naive implementation is O(n)

Summary

An LRU cache is an efficient cache data structure with time complexity O(1)

LRU Cache implementation

We saw how to write basic implementation. Let's think about how to make it better. The naive LRU cache works fine with one disadvantage - time complexity, and the root cause is the remove method in the linked list. You could ask, "wait a minute, why does remove takes O(n)? It should take O(1)". Yes, it should. Let's see why it takes O(n) instead of O(1).

For example, here's our linked list, and we want to delete 5. To delete a value, first, we need to find it, and the search takes O(n).

The search returns the linked list node, and to delete the node, we need to update the next and previous references. The deletion takes O(1). Aha! We need to eliminate the search to improve our basic implementation.

What if instead of storing a key and value in our dictionary, we would store LinkedListNode? In this case, we don't need to search for a linked list node. We could get it from the dictionary with O(1) and then do deletion with O(1).

private Dictionary<int, LinkedListNode<Item>> _cache = new Dictionary<int, LinkedListNode<Item>>();

private LinkedList<Item> _list = new LinkedList<Item>();

private int _capacity;

public LRUCache(int capacity)

{

_capacity = capacity;

}

Put operation

The logic behind of new put operation is the same as the previous one, with only one difference, we're doing the remove on the linked list node, and as a result, the operation takes O(1).

public void Put(int key, string value)

{

if(_cache.ContainsKey(key))

{

LinkedListNode<Item> existingNode = _cache[key];

_list.Remove(existingNode); // O(1)

}

else

{

if (_cache.Count == _capacity)

{

// evict least recently used element

_cache.Remove(_list.Last.Value.Key);

_list.RemoveLast();

}

}

Item cacheItem = new Item(key, value);

LinkedListNode<Item> node = new LinkedListNode<Item>(cacheItem);

_list.AddFirst(node);

_cache[key] = node;

}

The time complexity is O(1), and it's much better compared with O(n).

Get operation

The get operation almost identical to the basic version

public string Get(int key)

{

if(_cache.ContainsKey(key))

{

LinkedListNode<Item> node = _cache[key];

_list.Remove(node); // O(1)

_list.AddFirst(node);

return node.Value.Value;

}

return "No value";

}

Because of linked list node usage, the time complexity is O(1).

Full LRU cache implementation

public class LRUCache

{

private Dictionary<int, LinkedListNode<Item>> _cache = new Dictionary<int, LinkedListNode<Item>>();

private LinkedList<Item> _list = new LinkedList<Item>();

private int _capacity;

public LRUCache(int capacity)

{

_capacity = capacity;

}

public string Get(int key)

{

if(_cache.ContainsKey(key))

{

LinkedListNode<Item> node = _cache[key];

_list.Remove(node);

_list.AddFirst(node);

return node.Value.Value;

}

return "No value";

}

public void Put(int key, string value)

{

if(_cache.ContainsKey(key))

{

LinkedListNode<Item> existingNode = _cache[key];

_list.Remove(existingNode);

}

else

{

if (_cache.Count == _capacity)

{

_cache.Remove(_list.Last.Value.Key);

_list.RemoveLast();

}

}

Item cacheItem = new Item(key, value);

LinkedListNode<Item> node = new LinkedListNode<Item>(cacheItem);

_list.AddFirst(node);

_cache[key] = node;

}

private class Item

{

public Item(int key, string value)

{

Key = key;

Value = value;

}

public int Key;

public string Value;

}

}

Summary

In this tutorial, we learned what an LRU cache is. We saw how to implement the basic version and how it can be improved to achieve O(1) time complexity.